This is Part 2 in a two-part series about topic modeling for qualitative research. In the previous post, we discussed what topic modeling is, how EPAR uses topic modeling, and the technical nitty-gritty of our topic modeling code. We also provided our code for topic modeling using plays from Shakespeare. In this post, we continue using Shakespeare plays as an example and focus on how to interpret the results of topic modeling. Below are our top tips!

1. Work on a Whiteboard

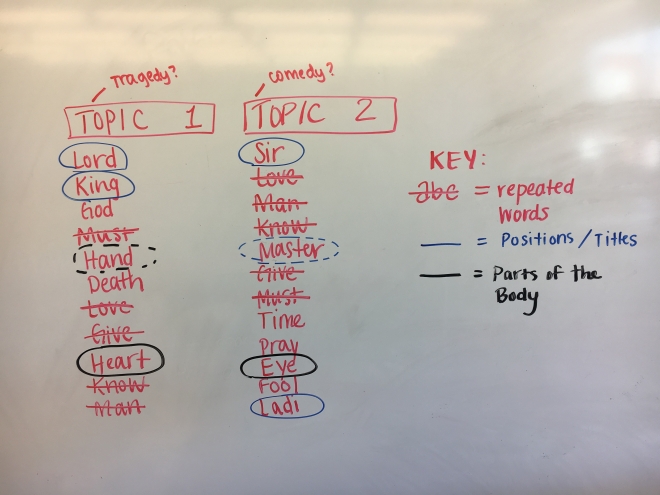

Figure 1. Whiteboard Analysis of Topic Modeling Results on Shakespeare’s Plays (k = 2)

At the beginning of the interpretation process, you will not know which patterns are important or how to group different results. A whiteboard gives you the flexibility to try different groupings, overlay categories on one another, and erase and start over when an approach adds little value. When we classified Shakespeare’s plays into two topics (Figure 1), we noticed that the distinctive words in both topics included positions and titles such as “lord”, “king”, “sir”, and “ladi” (the stem of “lady” and “ladies”). Interestingly, higher-status titles (e.g. “lord”, “king”) were more prominent in Topic 1, whereas lower-status titles (e.g. “sir”, “ladi”) were more prominent in Topic 2. This may reflect that characters in tragedies tend to be of higher status than characters in comedies.
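If you want a digital copy of the whiteboard, the same overlay can be sketched in a few lines of Python. This is our own illustration, not part of the Part 1 code. Because the Figure 1 word lists exist only in the image, it uses the top words from Figure 2 (k = 5) together with a set of hypothetical hand-made categories, and lists which words from each category land in each topic.

```python
# Top words per topic, copied from Figure 2 (k = 5).
TOPICS = {
    1: ["king", "lord", "queen", "duke", "franc", "princ", "god", "richard", "son"],
    2: ["caesar", "hamlet", "antoni", "rome", "titus", "cleopatra", "brutus", "nobl", "lucius"],
    3: ["love", "lord", "know", "man", "must", "give", "sir", "speak", "time"],
    4: ["sir", "'ll", "ant", "petruchio", "dro", "berown", "fool", "lear", "master"],
    5: ["sir", "duke", "page", "master", "princ", "ford", "rosalind", "falstaff", "mrs"],
}

# Hypothetical categories of the kind you might sketch on a whiteboard.
CATEGORIES = {
    "titles": {"king", "queen", "lord", "duke", "princ", "sir", "ladi", "master", "mrs"},
    "roman": {"caesar", "rome", "antoni", "brutus"},
}


def overlay(topics, categories):
    """For each topic, list which of its top words fall into each category."""
    return {
        topic: {name: [w for w in words if w in members] for name, members in categories.items()}
        for topic, words in topics.items()
    }


for topic, hits in overlay(TOPICS, CATEGORIES).items():
    print(f"Topic {topic}: {hits}")
```

In this k = 5 output, the title words concentrate in Topics 1 and 5 and Topic 2 has none, which is exactly the kind of pattern worth circling on the board.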

2. Start with a Small Number of Topics and Work Outward

One limitation of LDA topic modeling is that the algorithm does not determine the best number of topics (k) for your set of documents; you have to choose k up front. The Shakespeare example used a very small sample of 37 documents, so we first ran the model with two topics and then increased k until the results seemed too fragmented.
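The “start small and work outward” loop can be sketched as follows. This is a minimal, from-scratch collapsed Gibbs sampler for LDA written for illustration, not the code from Part 1; the function names (`lda_gibbs`, `top_words`, `explore_k`) and the defaults (`alpha=0.1`, `beta=0.01`, 200 iterations) are our own assumptions. Each document is expected as a list of cleaned, stemmed tokens, and `explore_k` returns the top words for every k so the lists can be compared side by side.

```python
import random


def lda_gibbs(docs, k, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA with a minimal collapsed Gibbs sampler (illustrative sketch)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    n_vocab = len(vocab)
    doc_topic = [[0] * k for _ in docs]               # tokens per (document, topic)
    topic_word = [[0] * n_vocab for _ in range(k)]    # tokens per (topic, word)
    topic_total = [0] * k                             # tokens per topic

    # Start with a random topic for every token.
    assignments = []
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            t = rng.randrange(k)
            z_d.append(t)
            doc_topic[d][t] += 1
            topic_word[t][index[w]] += 1
            topic_total[t] += 1
        assignments.append(z_d)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wi, t = index[w], assignments[d][i]
                # Take the token out of the counts before resampling its topic.
                doc_topic[d][t] -= 1
                topic_word[t][wi] -= 1
                topic_total[t] -= 1
                # Unnormalized conditional probability of each topic for this token.
                weights = [
                    (doc_topic[d][j] + alpha)
                    * (topic_word[j][wi] + beta)
                    / (topic_total[j] + n_vocab * beta)
                    for j in range(k)
                ]
                t = rng.choices(range(k), weights=weights)[0]
                assignments[d][i] = t
                doc_topic[d][t] += 1
                topic_word[t][wi] += 1
                topic_total[t] += 1
    return vocab, topic_word, doc_topic


def top_words(vocab, topic_word, n=10):
    """Return the n highest-count words for each topic."""
    return [
        [vocab[i] for i in sorted(range(len(vocab)), key=lambda i: -row[i])[:n]]
        for row in topic_word
    ]


def explore_k(docs, k_values, n=10):
    """Fit one model per k so the top-word lists can be compared side by side."""
    results = {}
    for k in k_values:
        vocab, topic_word, _ = lda_gibbs(docs, k)
        results[k] = top_words(vocab, topic_word, n)
    return results
```

With a hypothetical list of tokenized plays called `plays`, `explore_k(plays, range(2, 6))` would return top-word lists for k = 2 through 5. Pure Python is slow on full-length texts; in practice a library implementation such as scikit-learn’s `LatentDirichletAllocation` (with `n_components=k`) plays the same role inside the loop.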

Figure 2. Topic Modeling Results on Shakespeare’s Plays, k = 5

| Topic 1 | Topic 2 | Topic 3 | Topic 4 | Topic 5 |
|---|---|---|---|---|
| king | caesar | love | sir | sir |
| lord | hamlet | lord | ’ll | duke |
| queen | antoni | know | ant | page |
| duke | rome | man | petruchio | master |
| franc | titus | must | dro | princ |
| princ | cleopatra | give | berown | ford |
| god | brutus | sir | fool | rosalind |
| richard | nobl | speak | lear | falstaff |
| son | lucius | time | master | mrs |

As k approaches the number of documents in the sample, the cluster of words for each “topic” starts to resemble individual documents rather than themes shared across documents. This is a sign that the model is overfitting the small sample. For example, in Figure 2 (k = 5 for a sample of 37 plays), we start to see terms and names used only in specific plays (e.g. Topic 2 names characters from Antony and Cleopatra and Julius Caesar).
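One rough way to put a number on this fragmentation is to check how many of each topic’s top words appear in only a single document. The helper below is a hypothetical diagnostic of our own, not a standard LDA metric; `topics` maps each topic to its top-word list and `docs` is the tokenized corpus. If this share rises sharply as you increase k, topics are probably collapsing into individual plays.

```python
from collections import Counter


def document_frequency(docs):
    """Number of documents each word appears in."""
    return Counter(w for doc in docs for w in set(doc))


def single_document_share(topics, docs):
    """Share of each topic's top words that appear in exactly one document."""
    df = document_frequency(docs)
    return {
        topic: sum(df[w] == 1 for w in words) / len(words)
        for topic, words in topics.items()
    }
```

Where to draw the line between “a few play-specific names” and “too fragmented” remains a judgment call; the number simply makes the trend easier to compare across runs.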

The process of moving from one number of topics to another can also tell a story of its own. Some topics may be subdivided again and again, suggesting they were not very coherent to begin with, while others remain stable, suggesting that the original topic captured something shared by a large number of documents. Choosing the number of topics is not an exact science, but watching how topics split or persist as k changes builds useful intuition.
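To trace how topics split as k changes, you can match each topic in the larger model to its most similar topic in the smaller one. This sketch is our own illustration: it measures similarity as the Jaccard overlap between top-word lists, and the word lists in the example are hypothetical placeholders rather than our results. A parent topic that keeps gaining several children is being subdivided, while a child with high overlap to a single parent is a topic that persists.

```python
from collections import Counter


def jaccard(a, b):
    """Overlap between two top-word lists: 0 = no shared words, 1 = identical."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def match_topics(smaller_k, larger_k):
    """Map each topic in the larger model to (closest smaller-model topic, overlap)."""
    matches = {}
    for child, child_words in larger_k.items():
        parent = max(smaller_k, key=lambda p: jaccard(smaller_k[p], child_words))
        matches[child] = (parent, jaccard(smaller_k[parent], child_words))
    return matches


def split_counts(matches):
    """How many larger-model topics descend from each smaller-model topic."""
    return Counter(parent for parent, _ in matches.values())


# Hypothetical top-word lists for k = 2 and k = 3 (placeholders, not our results).
topics_k2 = {1: ["king", "lord", "queen", "duke"], 2: ["love", "sir", "ladi", "know"]}
topics_k3 = {1: ["king", "lord", "queen", "son"], 2: ["love", "know", "man", "must"],
             3: ["sir", "ladi", "fool", "master"]}
matches = match_topics(topics_k2, topics_k3)
# Topic 1 persists; topic 2 at k = 2 splits into topics 2 and 3 at k = 3.
print(split_counts(matches))
```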

3. Filter Out Words that Occur Repeatedly & Add Little Value

If some words occur across multiple topics, they may not be adding much value to your results, and you can consider adding them to your list of “stop words” to remove during data cleaning. In Figure 1, the words “must”, “love”, “give”, “know”, and “man” occurred in both topics and may simply be common words in Shakespeare’s plays. You can repeat the analysis after removing these words and see whether the new lists of top words are more helpful for interpreting the results.
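A quick way to collect candidate stop words is to count how many topics each top word appears in. The sketch below, our own illustration, applies this to the top words in Figure 2; `shared_words` and `remove_words` are hypothetical helper names. Whether to actually drop the candidates is a judgment call: here they turn out to be titles, which the next tip suggests may carry meaning.

```python
from collections import Counter

# Top words per topic, copied from Figure 2 (k = 5).
TOPICS = {
    1: ["king", "lord", "queen", "duke", "franc", "princ", "god", "richard", "son"],
    2: ["caesar", "hamlet", "antoni", "rome", "titus", "cleopatra", "brutus", "nobl", "lucius"],
    3: ["love", "lord", "know", "man", "must", "give", "sir", "speak", "time"],
    4: ["sir", "'ll", "ant", "petruchio", "dro", "berown", "fool", "lear", "master"],
    5: ["sir", "duke", "page", "master", "princ", "ford", "rosalind", "falstaff", "mrs"],
}


def shared_words(topics, min_topics=2):
    """Words that appear in the top-word lists of at least `min_topics` topics."""
    counts = Counter(w for words in topics.values() for w in set(words))
    return {w for w, c in counts.items() if c >= min_topics}


def remove_words(docs, stop_words):
    """Drop the given words from every tokenized document before refitting."""
    return [[w for w in doc if w not in stop_words] for doc in docs]


extra_stop_words = shared_words(TOPICS)
print(sorted(extra_stop_words))  # ['duke', 'lord', 'master', 'princ', 'sir']
```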

4. Investigate Words That Commonly Occur Together

In Figures 1 and 2, some pairs of words stayed together even as we increased k from two to five (e.g. “king” and “lord”, “must” and “give”, “man” and “know”). This could happen for a number of reasons. The most likely (and least interesting) explanation is that the words are often used together as a phrase (e.g. “must give” rather than “must” and “give” separately). If your domain knowledge supports this explanation, you can revise the code to treat the full phrase as a single token and run the analysis again to see whether the pattern disappears.
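One simple way to look for the full phrase is to merge word pairs that frequently appear next to each other into single tokens before refitting the model. The sketch below is an illustration rather than the Part 1 code: it uses raw bigram counts with a hypothetical `min_count` cutoff, and the token lists are toy examples. Raw counts favor pairs of very common words, so real pipelines usually score each pair against how often its words appear on their own; gensim’s `Phrases` model is one ready-made option.

```python
from collections import Counter


def merge_frequent_bigrams(docs, min_count=2):
    """Join adjacent word pairs seen at least `min_count` times into single tokens."""
    counts = Counter((a, b) for doc in docs for a, b in zip(doc, doc[1:]))
    phrases = {pair for pair, c in counts.items() if c >= min_count}
    merged_docs = []
    for doc in docs:
        out, i = [], 0
        while i < len(doc):
            if i + 1 < len(doc) and (doc[i], doc[i + 1]) in phrases:
                out.append(doc[i] + "_" + doc[i + 1])  # e.g. "must_give"
                i += 2
            else:
                out.append(doc[i])
                i += 1
        merged_docs.append(out)
    return phrases, merged_docs


# Toy token lists (hypothetical, already cleaned).
docs = [["must", "give", "thank", "lord"], ["king", "must", "give", "leav"]]
phrases, merged = merge_frequent_bigrams(docs, min_count=2)
print(phrases)  # {('must', 'give')}
print(merged)   # [['must_give', 'thank', 'lord'], ['king', 'must_give', 'leav']]
```

After merging, “must_give” is a single vocabulary item, so if “must” and “give” stop co-occurring in the new top-word lists, the syntactic explanation is the likely one.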

A more interesting reason words stick together as the number of topics increases is that they genuinely tend to appear in the same contexts. For example, “king” and “lord” in Topic 1 of Figure 2 may not be used together as a phrase, but they suggest a broader topic around ranks and titles. In fact, the second spreadsheet, which lists the documents and the topics they fall into for k = 5, shows that many plays about historical figures and kings (e.g. Henry IV, King John, Richard II) have a relatively high probability of classification into Topic 1.
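Reading the document-topic spreadsheet this way amounts to sorting documents by their probability for one topic. The sketch below assumes a hypothetical `doc_topic` table with one row per document (topics indexed from 0, so Topic 1 is index 0) and a matching list of titles; the titles and numbers in the example are placeholders, not our results. `to_probabilities` is a plain row normalization for models that report counts, without the smoothing some implementations apply.

```python
def to_probabilities(rows):
    """Turn per-document topic counts into proportions that sum to 1."""
    result = []
    for row in rows:
        total = sum(row)
        result.append([c / total if total else 0.0 for c in row])
    return result


def rank_documents(doc_topic, titles, topic, threshold=0.0):
    """Documents sorted by their probability for `topic`, keeping those above `threshold`."""
    pairs = [(title, row[topic]) for title, row in zip(titles, doc_topic)]
    pairs.sort(key=lambda pair: pair[1], reverse=True)
    return [(title, p) for title, p in pairs if p > threshold]


# Hypothetical example: placeholder titles and probabilities, not our results.
example = rank_documents(
    [[0.7, 0.3], [0.1, 0.9], [0.55, 0.45]], ["play_a", "play_b", "play_c"], topic=0, threshold=0.5
)
print(example)  # [('play_a', 0.7), ('play_c', 0.55)]
```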

5. Pay Attention to Surprises

You may observe words grouped together that you would not expect to be, or words you expected to be grouped together that end up in different topics. These surprises are welcome: they can give you hypotheses you might not have developed otherwise. For example, in Figure 1, it may be surprising that “eye” was placed in Topic 2 while other body parts such as “heart” and “hand” were placed in Topic 1. One possible explanation is that Shakespeare uses body parts as symbols for broader concepts (e.g. eyes are often a metaphor for self-awareness) rather than literally.
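Before building a hypothesis on a surprise, it can help to check how decisively the word belongs to its topic. The sketch below assumes `topic_word` is a list with one dict of word weights per topic (a hypothetical structure, with topics indexed from 0; adapt it to whatever your code outputs) and reports both the dominant topic and the gap to the runner-up. A word whose weights are nearly equal across topics is a weaker surprise than one placed firmly in an unexpected topic.

```python
def dominant_topic(topic_word, word):
    """Index of the topic where `word` has the highest weight (ties go to the lower index)."""
    weights = [row.get(word, 0.0) for row in topic_word]
    return max(range(len(weights)), key=weights.__getitem__)


def topic_margin(topic_word, word):
    """Gap between the word's highest and second-highest topic weights."""
    weights = sorted((row.get(word, 0.0) for row in topic_word), reverse=True)
    return weights[0] - weights[1] if len(weights) > 1 else weights[0]


# Hypothetical weights for two topics (placeholders, not our results).
topic_word = [
    {"heart": 0.020, "hand": 0.030, "eye": 0.010},
    {"heart": 0.015, "eye": 0.040},
]
for w in ["heart", "hand", "eye"]:
    print(w, dominant_topic(topic_word, w), round(topic_margin(topic_word, w), 3))
```

In these placeholder numbers, “eye” sits firmly in the second topic while “heart” is close to the boundary, so the eye result would be the more robust surprise to investigate.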

As these five tips show, topic modeling results, and the process of interpreting them, can help you develop genuinely interesting insights about the problem you are studying. It helps to have a large sample of documents and strong domain knowledge about them, but as the examples show, you can still make interesting discoveries with a small sample and limited information. As useful as machine-led analyses can be, remember that context is supplied by the human eye: topic modeling on its own is not a deductive approach.

Resources for Learning More About Topic Modeling

- Text Mining with R. Chapter 6, Topic Modeling.

- Topic Modeling with Scikit Learn.

- Patrick van Kessel’s series of posts about topic models for text analysis.

By Namrata Kolla, Rohit Gupta & Terry Fletcher